Strands2Cards: Automatic Generation of Hair Cards from Strands

Abstract

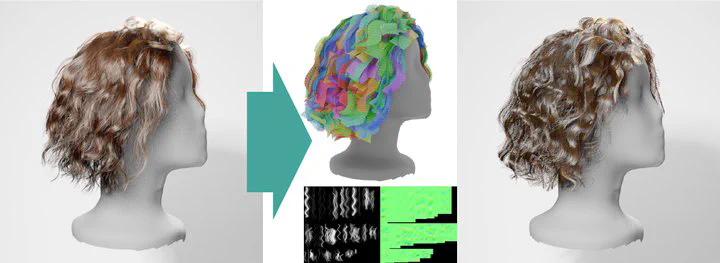

We present a method for automatically converting strand-based hair models into an efficient mesh-based representation, known as hair cards, for real-time rendering. Our method takes strands as input and outputs polygon strips with semi-transparent textures, preserving the appearance of the original strand-based hairstyle. To achieve this, we first cluster strands into groups, referred to as wisps, and generate hairstyle-preserving texture maps for each wisp by skinning-based alignment of its strands into a normalized pose in UV space. These textures can also be shared among similar wisps to make better use of the limited texture resolution. Next, polygon strips are fitted to the clustered strands via a tailored differentiable renderer that optimizes transparent, cluster-colored coverage masks. The proposed method handles a wide range of hair models and outperforms existing approaches in representing volumetric hairstyles, such as curly and wavy ones.
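To make the data flow concrete, here is a minimal NumPy sketch of the two geometric ends of such a pipeline: grouping strands into wisps and turning a wisp into a textured polygon strip. It is not the paper's method. The clustering is plain k-means on strands resampled by arc length (an assumed stand-in for the paper's wisp clustering), and the card is a fixed-width quad strip laid along the wisp's mean centerline and oriented by an assumed fixed `facing` direction, whereas the paper fits strips with differentiable rendering. All function names (`resample_strand`, `cluster_wisps`, `strip_from_centerline`) are hypothetical.

```python
import numpy as np


def resample_strand(points: np.ndarray, n: int) -> np.ndarray:
    """Resample an (m, 3) strand polyline to n points spaced evenly by arc length."""
    seg = np.linalg.norm(np.diff(points, axis=0), axis=1)
    s = np.concatenate([[0.0], np.cumsum(seg)])  # cumulative arc length
    t = np.linspace(0.0, s[-1], n)
    return np.stack([np.interp(t, s, points[:, d]) for d in range(3)], axis=1)


def cluster_wisps(strands, k: int, n: int = 16, iters: int = 20):
    """Assumed stand-in for wisp clustering: plain k-means on resampled strands."""
    X = np.stack([resample_strand(s, n).ravel() for s in strands])
    # Deterministic init: k evenly spaced strands from the input order.
    centers = X[np.linspace(0, len(X) - 1, k).astype(int)].copy()
    for _ in range(iters):
        dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
        labels = dist.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):  # keep the old center if a wisp empties
                centers[j] = X[labels == j].mean(axis=0)
    # Each center, reshaped to (n, 3), is that wisp's mean centerline.
    return labels, centers.reshape(k, n, 3)


def strip_from_centerline(center: np.ndarray, width: float,
                          facing=(0.0, 0.0, 1.0)):
    """Hypothetical card builder: a fixed-width quad strip along a centerline.

    Returns (vertices (2n, 3), uvs (2n, 2), triangles (2(n-1), 3)).
    Assumes the centerline is never parallel to `facing`.
    """
    n = len(center)
    tangent = np.gradient(center, axis=0)
    tangent /= np.linalg.norm(tangent, axis=1, keepdims=True)
    side = np.cross(tangent, np.asarray(facing, dtype=float))
    side /= np.linalg.norm(side, axis=1, keepdims=True)
    verts = np.empty((2 * n, 3))
    verts[0::2] = center - 0.5 * width * side  # one edge of the card
    verts[1::2] = center + 0.5 * width * side  # the opposite edge
    # u spans the card width; v runs from root (0) to tip (1).
    uvs = np.empty((2 * n, 2))
    uvs[0::2, 0], uvs[1::2, 0] = 0.0, 1.0
    uvs[:, 1] = np.repeat(np.linspace(0.0, 1.0, n), 2)
    # Two triangles per segment between consecutive vertex pairs.
    a = 2 * np.arange(n - 1)
    tris = np.concatenate([np.stack([a, a + 1, a + 2], axis=1),
                           np.stack([a + 1, a + 3, a + 2], axis=1)])
    return verts, uvs, tris
```

For example, six straight strands hanging along -y in two well-separated groups are split into two wisps, and each wisp's centerline yields a 32-vertex, 30-triangle card. Point-wise Euclidean k-means is deliberately crude; it ignores strand shape similarity and cannot capture the coverage of curly or wavy wisps, which is exactly what the paper's texture generation and differentiable strip fitting are designed to address.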